How To Add Dynamic Resource Allocation In Pyspark

Defining the universal workload and associating corresponding resources is always difficult. Even if most of time expected resources will back up the load, in that location e'er will be some interval in the year when data activeness volition grow (e.g. Blackness Friday). One of Spark'southward mechanisms helping to forestall processing failures in such situations is dynamic resources resource allotment.

New ebook 🔥

Acquire 84 ways to solve common information applied science issues with cloud services.

👉 I want my Early Admission edition

This post focuses on the dynamic resource allotment feature. The kickoff role explains information technology with special focus on scaling policy. The 2d part points out why the described feature needs external shuffle service. The last part contains a demo showing how resources are dynamically allocated.

Dynamic resource allocation

By default, resources in Spark are allocated statically. Information technology can atomic number 82 to some problematic cases. Above all, it'due south difficult to guess the exact workload and thus define the corresponding number of executors . It tin produce 2 situations: underuse and starvation of resources. The offset one means that likewise many resources were reserved only only a small part of them is used. The second instance means that one processing takes all available resources and prevents other applications to start.

Spark executors

An executor in Spark is a long running unit of measurement of processing (JVM) launched at the start of Spark application and killed at its stop. Information technology'due south equanimous of CPU and retention that tin be defined, respectively, in spark.executor.cores and spark.executor.retentiveness properties. This unit of processing is persisted, i.e. it'south non destructed and recreated on every executed job.

The executors shouldn't be confounded with the thought of workers. In fact, two or more executors can exist launched on the same worker.

Dynamic resource allocation is one of solutions for above issues. It adapts resources used in processing according to the workload. This feature is controlled by spark.dynamicAllocation.enabled configuration entry. And thanks to other parameters we can specify the initial/minimal/maximal number of executors (spark.dynamicAllocation.(initialExecutors|minExecutors|maxExecutors).

Abreast parameters listed previously, Spark has some other configuration parameters helping to define scaling policy:

- scaling upward - each Spark task can be executed immediately or non. In this second case, it goes to the pending state. If some of these task remain in pending land for more than the time divers in spark.dynamicAllocation.schedulerBacklogTimeout, a request for calculation more than executors will be sent (of course, merely if dynamic allocation is enabled). Later on sending the starting time asking of this type, the subsequent requests are sent every spark.dynamicAllocation.sustainedSchedulerBacklogTimeout. Past default, this value is the same as in the case of schedulerBacklogTimeout. The number of added executors in each round of requests grows exponentially (one, two, 4, 8...). The exponential growth is adapted for 2 situations: when there are not many needed executors (1, two, 4) and when there are a lot of them (heavy workload, 8)

- scaling down - but the executors tin as well be given back to the cluster if the workload decreases. In this situation some of them won't treat any data, so they will pass to idle country. The parameter spark.dynamicAllocation.executorIdleTimeout defines the adequate idle state for each executor. If in that location are executors remaining idle for more than than this duration, they are removed. Withal, if the executor to remove stores some blocks in its cache, it won't be destroyed immediately but after the fourth dimension specified in spark.dynamicAllocation.cachedExecutorIdleTimeout parameter (of grade, only if information technology's still idle later this elapsing).

External shuffle service

Still, it's non enough to enable dynamic resource allocation through the configuration described in the previous section. In additional, the external shuffle service must be activated (spark.shuffle.service.enabled configuration entry). org.apache.spark.ExecutorAllocationManager that is a course responsible for the dynamic resource allocation, tells explicitly why the external shuffle service is needed:

// Crave external shuffle service for dynamic allocation // Otherwise, we may lose shuffle files when killing executors if (!conf.getBoolean("spark.shuffle.service.enabled", false) && !testing) { throw new SparkException("Dynamic allocation of executors requires the external " + "shuffle service. Y'all may enable this through spark.shuffle.service.enabled.") } Thanks to the external shuffle service, shuffle data is exposed outside of executor, in separate server, and thus tin survive after the removal of given executor. In issue, executors fetch shuffle data from the service and not from each other.

Dynamic resource resource allotment instance

To test the dynamic resource allocation, the post-obit lawmaking snippet will be used:

object Main { @transient lazy val Logger = LoggerFactory.getLogger(this.getClass) def main(args: Assortment[String]): Unit = { // Activate speculative task val conf = new SparkConf().setAppName("Spark dynamic allocation demo") .set("spark.dynamicAllocation.enabled", "true") .set("spark.shuffle.service.enabled", "truthful") .set up("spark.dynamicAllocation.initialExecutors", "one") .set("spark.dynamicAllocation.executorIdleTimeout", "120s") .set("spark.dynamicAllocation.schedulerBacklogTimeout", "1s") val sparkContext = new SparkContext(conf) Logger.info("Starting processing") sparkContext.parallelize(0 to 5, v) .foreach(item => { // for each number expect 3 seconds Thread.slumber(3000) }) Logger.info("Terminating processing") } } Equally you tin can run into, it blocks task executions for 3 seconds. According to the defined configuration, it should claim additional executors because of ane second excess timeout. The code was compiled with sbt package and deployed on my Dockerized Spark Yarn cluster composed of 3 workers. The application was launched with the following command:

spark-submit --deploy-way cluster --master yarn --jars ./shared/spark-dynamic-resource-allocation_2.xi-i.0.jar ./shared/spark-dynamic-resource-allocation_2.11-1.0.jar --class com.waitingforcode.Main

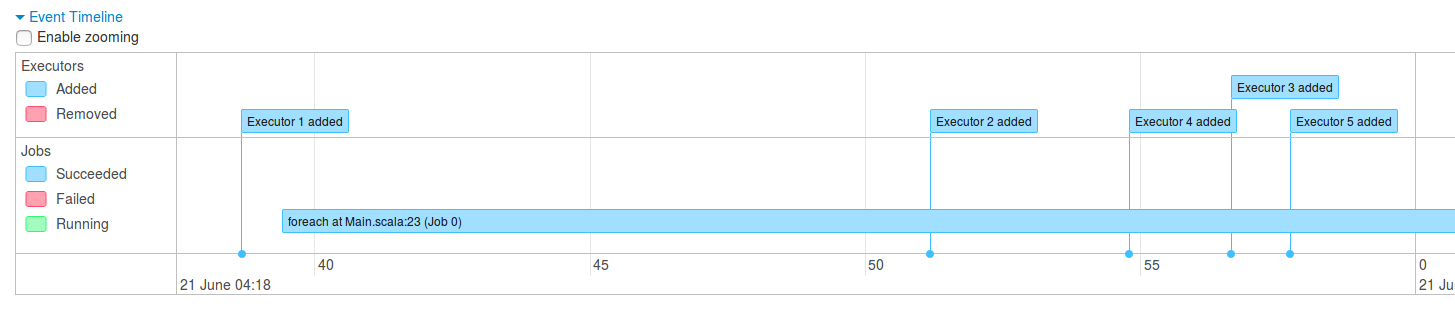

The allocation of additional executors can be observed in below paradigm representing "Event timeline" part from Spark UI:

The action of the dynamic resource allotment is also visible in the logs:

DEBUG ExecutorAllocationManager: Starting timer to add executors because pending tasks are building up (to expire in 1 seconds) DEBUG ExecutorAllocationManager: Clearing idle timer for 1 because it is now running a task INFO YarnAllocator: Driver requested a total number of 2 executor(s). DEBUG ApplicationMaster: Number of pending allocations is 0. Slept for 1472/3000 ms. INFO ExecutorAllocationManager: Requesting 1 new executor because tasks are backlogged (new desired total will exist 2) DEBUG ApplicationMaster: Sending progress INFO YarnAllocator: Volition request one executor container(s), each with 1 core(s) and 1408 MB memory (including 384 MB of overhead) DEBUG AMRMClientImpl: Added priority=1 DEBUG AMRMClientImpl: addResourceRequest: applicationId= priority=1 resourceName=* numContainers=1 #asks=1 INFO YarnAllocator: Submitted 1 unlocalized container requests. DEBUG ExecutorAllocationManager: Starting timer to add together more than executors (to expire in one seconds) INFO YarnAllocator: Launching container container_1498015239683_0008_01_000003 on host spark-slave-3 INFO YarnAllocator: Received ane containers from YARN, launching executors on 1 of them.

Spark offers the dynamic resources resource allotment equally the solution for resources misuse. It helps to avoid the situation where the cluster composition doesn't fit to the workload. Thanks to the dynamic allocation, enabled with appropriated configuration entries, Spark asks for more resources when the workload increases and releases them once it decreases. Equally shown in the second section, one prerequisite is demanded. To use the dynamic resources allocation, the external shuffle service must be enabled. Thank you to information technology, shuffle files won't be lost after executor's decomission. The final function demonstrated the dynamic resources allotment in activeness. Based on simple thread sleep trick, we were able to see how ExecutorAllocationManager requested for more executors because of as well many idle tasks.

How To Add Dynamic Resource Allocation In Pyspark,

Source: https://www.waitingforcode.com/apache-spark/dynamic-resource-allocation-spark/read

Posted by: lyonsupor1988.blogspot.com

0 Response to "How To Add Dynamic Resource Allocation In Pyspark"

Post a Comment